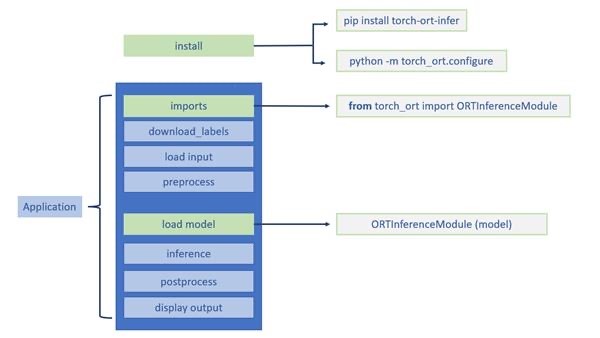

Faster inference for PyTorch models with OpenVINO Integration with Torch-ORT - Microsoft Open Source Blog

Inference mode complains about inplace at torch.mean call, but I don't use inplace · Issue #70177 · pytorch/pytorch · GitHub

Reduce inference costs on Amazon EC2 for PyTorch models with Amazon Elastic Inference | AWS Machine Learning Blog

How to PyTorch in Production. How to avoid most common mistakes in… | by Taras Matsyk | Towards Data Science

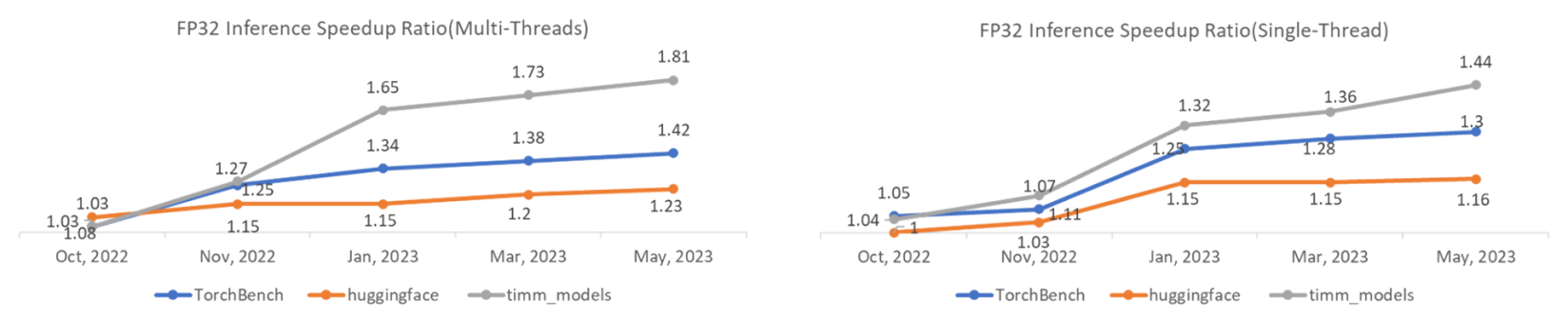

TorchDynamo Update: 1.48x geomean speedup on TorchBench CPU Inference - compiler - PyTorch Dev Discussions

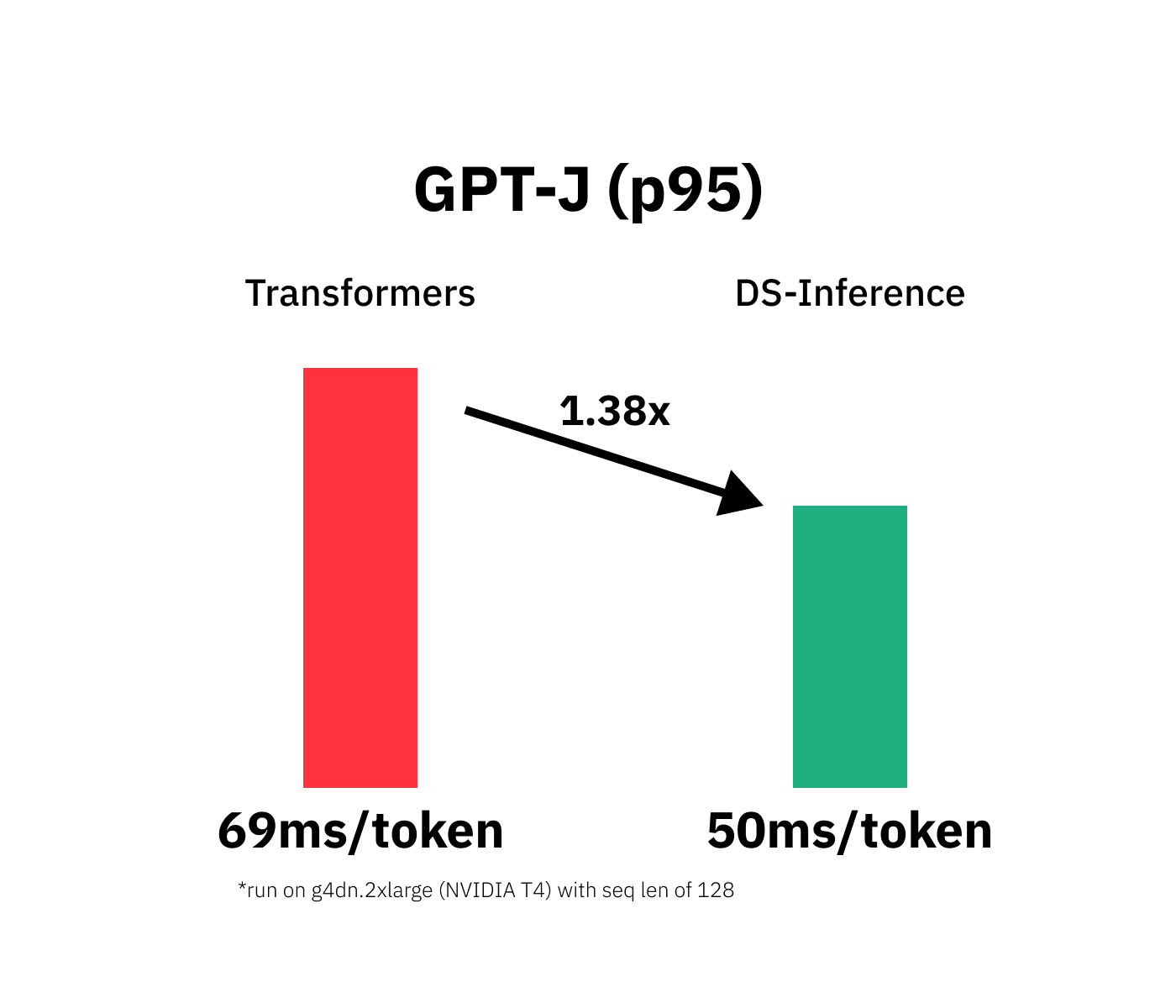

Deployment of Deep Learning models on Genesis Cloud - Deployment techniques for PyTorch models using TensorRT | Genesis Cloud Blog